PP-YOLO Object Detection Algorithm: Why It’s Faster than YOLOv4 [2021 UPDATED]

Object detection research has hit its stride in 2021 with state-of-the-art frameworks including YOLOv5, Scaled YOLOv4, PP-YOLO, and now PP-YOLOv2 from Baidu. PP-YOLO2 builds upon the PP-YOLO framework, with some important improvements. The authors added Path Aggregation Network, mish activation function, increased image input size, and fine-tuned YOLO’s loss function. That means minimizing data loss from overlapping bounding boxes. Maximize your object detection model by choosing the right YOLO algorithm framework for your model. Continue reading to learn about PP-YOLO and the YOLO algorithm for object detection.

Introduction to PP-YOLO

PP-YOLO (or PaddlePaddle YOLO) is a machine learning object detection framework based on the YOLO (You Only Look Once) object detection algorithm. PP-YOLO is not a new kind of object detection framework. Rather, PP-YOLO is a modified version of YOLOv4 with an improved inference speed and mAP score. These improvements are achieved by using a RESNET-50 backbone architecture and additional enhancements such as larger batch size, Dropblock, IOU Loss, and pre-trained models. In this article, we’ll explain fully what PP-YOLO is, why it is an improvement over YOLOv4, and show you how to use PP-YOLO for object detection.

- What is Object Detection?

- YOLO and PP-YOLO Explained

- PP-YOLO: Structure

- How to Use PP-YOLO

- 5 Reasons PP-YOLO is an Improvement Over YOLOv4

- Impact on Framerate, mAP, and Inference Time

- Summary

What is Object Detection?

The human brain perceives the world and makes sense of its environment instantly. The two common tasks that the human visionary system does especially well are classifying and localizing objects. Imagine you are driving a car. Detecting objects in your surrounding environment is a trivial task. You have no trouble at all differentiating the cars and road signs around you from other objects and obstacles (unless, perhaps, you are a terrible driver). AI researchers and computer vision scientists have been working hard to make machines understand the world visually in the way that we humans do – instantaneously and accurately. This is what we refer to as object detection.

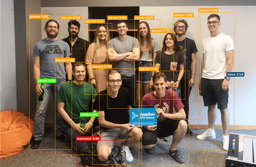

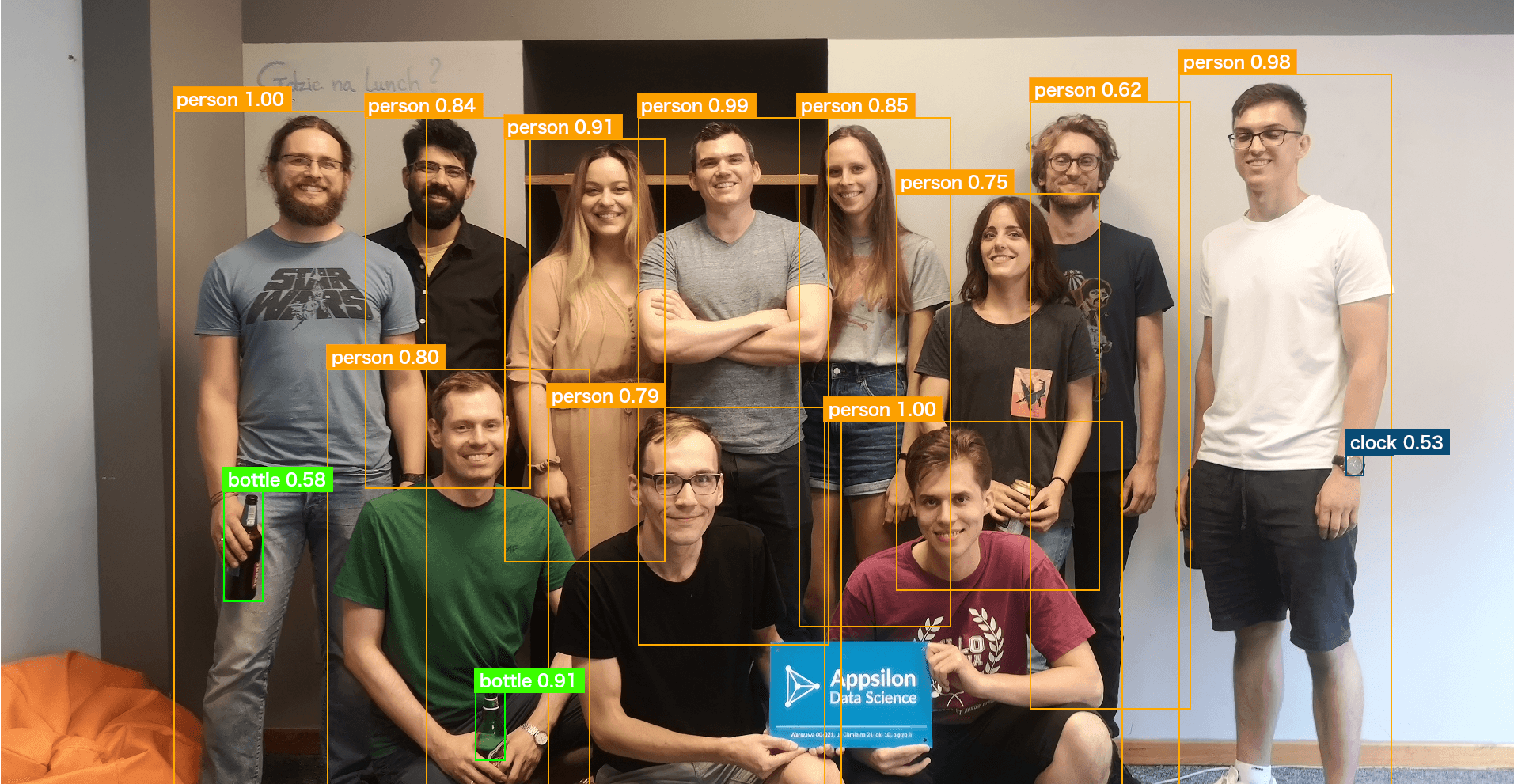

Object detection in action with Appsilon co-founder Damian Rodziewicz. The YOLO model predicts different types of objects in the photo with a high degree of accuracy.

YOLO Algorithm and PP-YOLO Explained

In our last post on object detection and the YOLO algorithm, we discussed:

- How Object Detection Works

- Differences Between One-stage and Two-stage Object Detectors

- Motivation Behind Using a One-stage Detector

We then explored the darknet framework and applied the YOLO algorithm to a test image in order to predict the bounding box coordinates of various objects (two people, two cups, a chair, a laptop, and a dining table).

See more with Appsilon’s Computer Vision and Custom ML Solutions.

We observed that there is a trade-off between one-stage and two-stage detectors in terms of inference speed and accuracy. One-shot object detectors have been widely adopted in the computer vision community and variants of these detectors have been developed to make it run not only on powerful GPU-enabled computers but also on small embedded devices.

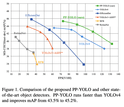

Recently researchers at BAIDU worked on improving the performance of the original YOLO framework by adapting it a bit and have reached better options balancing the efficiency and effectiveness of the network. As you can see from Figure 1 below (figure from Long et al., 2020) that PP-YOLO outperforms the start-of-the-art YOLOv4 both in terms of FPS and mAP by a significant margin.

Source: Long et al., 2020

The algorithm called PP-YOLO or PADDLE-PADDLE YOLO is not a new object detection framework but a recipe to improve inference speed and the mAP score.

PP-YOLO: Structure

A common paradigm amongst object detection frameworks has been to split the network into three essential components:

- Backbone Network

- Neck

- Detection Head

Backbone network: Different variants of backbone networks have been explored in the last couple of years; the most efficient and popular being the ResNet network (Deep Residual Learning for Image Recognition) which is quite a popular network architecture for object classification tasks. In the original YOLO V3 paper, the Darknet-53 architecture was used. However, since ResNet has been widely studied and comes bundled with pre-trained networks, the authors decided to choose ResNet as a backbone network architecture which alone provided a boost in both effectiveness and efficiency.

Neck: The neck of the network is where the network is made robust by making it scale and size invariant. Instead of augmenting the images, wouldn’t it be great if the network itself could learn variances in scale? FPN (Feature Pyramid Network) is a common choice for a feature extractor addition which works by stacking up features at different scales in a bottom-up fashion.

Detection Head: This is the final layer of the network which outputs the bounding box coordinates, class, and confidence score of the object.

Appsilon team members demonstrate PP-YOLO object detection.

How to Use PP-YOLO

Now that we have seen how the architecture of the network is designed, let us explore how you can get started with experimenting with a trained model on a toy example.

Setting up your machine:

In order to play around with PP-YOLO, you need to install the required dependencies and libraries. We will use a pre-built docker image provided by the authors of the Paddle Paddle deep learning framework.

Start by running the following command in your terminal window:

nvidia-docker pull hub.baidubce.com/paddlepaddle/paddle:1.8.4-gpu-cuda10.0-cudnn7

Once the docker image is pulled you can start using it.

Run the following command to start the docker container:

nvidia-docker run --name paddle -it -v $PWD:/paddle -v path_to_your_data:/data hub.baidubce.com/paddlepaddle/paddle:1.8.4-gpu-cuda10.0-cudnn7 /bin/bash

You will have to change the path to your own image folder. Just replace the path_to_your_data with your path.

Now, execute the following command to get the predictions on a test image:

CUDA_VISIBLE_DEVICES=0 python tools/infer.py -c configs/ppyolo/ppyolo.yml -o weights=

https://paddlemodels.bj.bcebos.com/object_detection/ppyolo.pdparams --infer_img=/data/my_image.jpg

Original Image

PP-YOLO Predicted Image

As you can see, the model was very accurate in classifying and localizing the three animals in the image. Two birds and one zebra. The model did not incorrectly identify the object behind the zebra as a bird or a group of birds.

5 Reasons PP-YOLO Is An Improvement Over YOLOv4

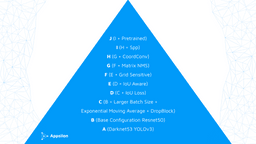

The authors of the paper experimented with 9 unique ways to tweak the base configuration to give a better performance. Let us explore 5 of these configurations:

Configuration Settings for the PP-YOLO FRAMEWORK

Batch Size

In order to improve the model performance, the authors of the paper experimented with a batch size of 192. Training the neural network with a larger batch size leads to a boost in the accuracy of the model by stabilizing the training process.

Exponential Moving Average

Exponential moving average algorithms are quite common in the financial analytics domain. Using a similar methodology, the authors improved the performance of the model by smoothing out the weights during the training phase. This process is also very memory efficient since at every update step only the value of the smoothing factor needs to be kept in memory. This value was set to 0.9998 in the paper.

Dropblock

One way to improve the accuracy of the model and ensure that the model does not overfit the training data is to use regularization techniques like adding noise or using a dropout layer. Dropout layers randomly drop or ignore some nodes while training the neural network. This process can be made even more robust by dropping neurons in a continuous region as opposed to doing it independently. This ensures that the model can generalize well on unseen data.

IoU Loss

Neural networks have a loss function to make the network learn and adapt. In the original YOLOv3 paper only the L1 loss was used and adapted for estimating the bounding box coordinates. IoU or Intersection over union indicates what percentage of the predicted box coordinates overlaps with the ground-truth bounding box coordinates. The authors from the BAIDU research team experimented with both types of losses in the training process. The authors of the paper decided to stick with the basic version of the IoU loss since it gives good results in estimating the mAP metric.

Pretrained Models

Using pre-trained models and transferring the knowledge learned from one domain to another domain is a common trick to improve the speed of training the model and the effectiveness of the model. The authors used a better set of pre-trained weights for the backbone architecture which allowed them to improve the final detection accuracy even more.

Frame Rate, mAP, and Inference Time

Let us now explore how the above methods impact the following:

- Frame Rate

- Mean Average Precision

- Inference Time

Frame Rate

Computer vision applications using edge hardware need to have very low latency in processing the individual frames extracted from a live video stream. Changing the backbone architecture from Darknet-53 to ResNet leads to a significant increase in the number of frames that can be processed per second. The other configurations applied later do slow the algorithm slightly, however, it remains significantly faster than the benchmark.

Mean Average Precision (mAP)

Let us now see how the mAP is affected by the change in the base configuration.

As you can see there is a 5.7% increase (that is a 14.6% relative improvement!) in the mAP score from the base configuration to the sophisticated configuration (denoted by J).

Inference Time

Building models and optimizing them to run as fast as possible requires a fair bit of engineering and tweaking. As you can see in the plot below, the time it takes for the model to make the predictions is drastically reduced from 17.2 ms with the original darknet architecture to 13.7 ms with ResNet as a backbone architecture and the other configurations applied.

Summary: PP-YOLO and YOLO Algorithm

Object detection frameworks have come a long way from two-stage detectors to one-stage detectors. Detecting objects with a higher frame rate without sacrificing too much of the model accuracy remains paramount. Particularly in industrial applications where the models need to run on edge devices with limited computational power. PP-YOLO does not introduce a new way of designing object detection models, however, it provides a valuable case study of which tricks known from a wider field of deep learning work well in the context of one-stage object detectors.

In some ways, this can be seen as a limitation of the currently proposed implementation of PaddlePaddle, which is not (yet?) in the mainstream of deep learning frameworks. It will be interesting to see how PP-YOLO performs in practice and whether it will draw more attention of practitioners to the PaddlePaddle framework itself. If the improvements do translate to better industrial solutions, it may also lead to the adoption of the proposed tweaks. Specifically to implementations of the YOLO algorithm used in more common frameworks such as Tensorflow or PyTorch.