Making The Most Out of {shiny.telemetry} Using PostgreSQL & Timescale

Recently, I was tasked with setting up telemetry for various demo applications here at Appsilon and building a dashboard to monitor the generated telemetry data. Being a performance enthusiast, I aimed for optimal performance, including fast database writes and quick retrieval of aggregated data. In this blog post, I will showcase how I used Timescale with PostgreSQL to ensure the applications run efficiently while aggregating data created by these apps.

TOC:

What is Timescale?

Timescale is an open source PostgreSQL extension that aims to make working with time series data as seamless as possible with the best performance. Timescale allows you to create hyper-tables, which are clustered tables that divide data into chunks of time auto-magically; this enables fast query performance (take a look at their performance benchmark).

Timescale also provides a new kind of materialized view called a continuous aggregate. A continuous aggregate is a materialized view that updates automatically when new data is inserted. Even though this sounds like a very expensive computation, continuous aggregates are smartly configured to only update new data. Continuous aggregates use time-buckets to partition the data and update only the latest time buckets.

Timescale offers many more features but these are the main two that are of interest for our application. Hypertables will enable crazy fast reads and writes, which continuous aggregates will enable our dashboard to do the least amount of computation possible per query.

Setting up our database and optimizing configuration for high-performance telemetry handling

Even though shiny.telemetry offers a default database setup, we will create our own schema to enable the crazy fast performance we want. We will start by defining our `even_log` hyper table.

CREATE TABLE event_log (

time TIMESTAMPTZ NOT NULL,

app_name TEXT NOT NULL,

session TEXT,

type TEXT NOT NULL,

details JSONB NOT NULL

);

-- Creates a Hypertable for the event logs

SELECT create_hypertable('event_log', 'time');

Here we define our table, the main differences with the default implementation is the indication of `NOT NULL`, this will give us some guarantees at the moment of doing queries and the type of `details` from `TEXT` to `JSONB`. The change of types for `details` will enable us to make use of the JSON data that shiny.telemetry sends to our database without having to fetch said data. Then we convert our table into a hypertable by using the `create_hypertable` function provided by Timescale. This will cluster our table into different partitions using the variable `time`.

The next part requires a lot of repetition so I will only show some of what I create the continuous aggregates I needed for the dashboard. We will create a continuous aggregate that continuously aggregates (sorry for the redundancy but it is what it is) the number of logins to an app every minute.

CREATE MATERIALIZED VIEW login_count_per_minute WITH ( timescaledb.continuous ) AS

SELECT

time_bucket('1minute', time) as login_time,

app_name,

count(*) as login_count

FROM event_log

WHERE type = 'login'

GROUP BY login_time, app_name

WITH NO DATA;

This materialized view will update every time a new user logs in; since no users will login in the past then we will only need to update the latest time buckets making it really fast. In the actual code I repeat this for various intervals like 1 hour, 1 day and 1 week.

I also wanted to count how many total inputs were being registered on a particular app per different intervals, so I built the following continuous aggregate.

CREATE MATERIALIZED VIEW total_input_events_count_per_second WITH ( timescaledb.continuous ) AS

SELECT

time_bucket('1second', time) as input_time,

app_name,

count(*) as input_count

FROM event_log

WHERE type = 'input'

GROUP BY input_time, app_name

WITH NO DATA;

Finally I wanted to count how many times one particular input changed per app per interval. Once again I created a continuous aggregate that would aggregate this data. In this case I took advantage of the fact we are using JSON data instead of plain text. This way we were able to filter by details like `input_id`. We extract and group by the value of key `id` inside details when the `type` of entry is `input`-

CREATE MATERIALIZED VIEW input_events_count_per_minute WITH ( timescaledb.continuous ) AS

SELECT

time_bucket('1minute', time) as input_time,

app_name,

details#>>'{id,0}' AS input_id,

count(*) as input_count

FROM event_log

WHERE type = 'input'

GROUP BY input_time, app_name, input_id

WITH NO DATA;

I also created some refresh policies in case past data is inserted, but I will not cover it since that’s a whole other topic.

Creating an App: Efficient Database Querying for Real-time Shiny App Charts

While I will not go into the step by step of how I build the app, I will go into how I queried the database efficiently to build the charts I wanted. What did I want? I wanted a chart similar to the product in this video that would display the total input count on the app every second. Continuous aggregation makes this possible since we are not really aggregating any data when doing the query from Shiny, it’s all done in the background by timescale. So I started by building a function that would allow me to query the data and also fill the missing gaps.

build_per_second_query <- function(connection, app_name, start, end) { glue_sql( r"( SELECT time_bucket_gapfill('1second', input_time) AS input_time_fill, app_name, COALESCE(SUM(input_count), 0) AS input_count FROM total_input_events_count_per_second WHERE input_time >= {start} AND input_time <= {end} AND app_name IN ({app_name*})

GROUP BY input_time_fill, app_name

)",

.con = connection

)

}

The function shown above builds a sql query that queries the data for the app/apps in `app_name`, from `start` to `end` which fills in missing values using the function `time_bucket_gapfill` provided to us by Timescale. We also use the `COALESCE` function to replace null values with 0. I built the same sort of function for the different intervals I wanted to analyze and wrote some extra logic to actually execute the query.

to_utc <- function(ts) {

with_tz(ts, "UTC")

}

get_total_input_count <- function(connection, app_name, interval, start, end) {

query <- build_query(connection, app_name, interval, to_utc(start), to_utc(end))

dbGetQuery(conn = connection, query)

}

build_query <- function(connection, app_name, interval, start, end) {

switch(interval,

second = build_per_second_query(connection, app_name, start, end),

minute = build_per_minute_query(connection, app_name, start, end),

hour = build_per_hour_query(connection, app_name, start, end),

day = build_per_day_query(connection, app_name, start, end),

week = build_per_week_query(connection, app_name, start, end)

)

}

Inside my Shiny app I created a reactive timer that would run this query every second, looking to data in the past 120 seconds.

second_timer <- reactiveTimer(second_in_millis)

last_60_seconds <- reactive({

req(selected_app())

second_timer()

get_total_input_count(

connection,

selected_app(),

"second",

start = Sys.time() - lookback(120, "secs"),

end = Sys.time()

)

})

I then used `echarts4r` to build the chart and render it to my users.

graph_total_inputs <- function(ts, title) { ts |>

e_charts(input_time_fill) |>

e_line(input_count, name = "Total input events") |>

e_title(title) |>

e_animation(FALSE)

}

...

output$inputs_per_second <- renderEcharts4r({

graph_total_inputs(last_60_seconds(), "Last minute")

})

Successful Implementation: Real-time App Monitoring with Live Input Counts and Historical Data Analysis

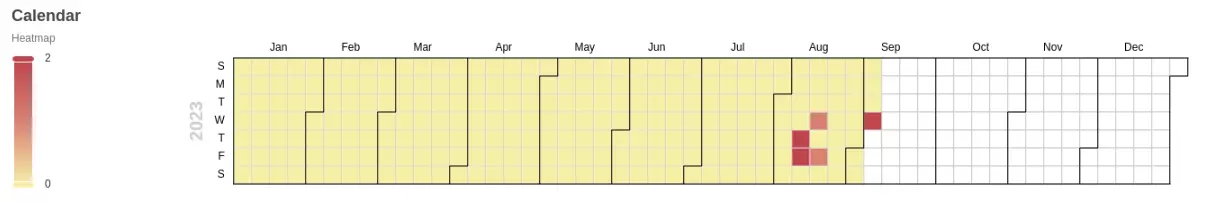

We can see we are able to see input counts on our apps live without any performance issues. We are also able to analyze historical data like historic login counts in visualizations like heatmaps, without having to process any data from R.

Conclusion

Many times when we want to write awesome data applications we are limited by the tools we know. This article is an invitation to leave your comfort zone and learn new tools that may enable you to build things you wouldn’t have been able to before. If we processed all this data from R, we would quickly run into bottlenecks. If we used base PostgreSQL, it would have taken way longer to configure partitioning and greetings jobs for continuously updating our data, with possibly worse performance. If you are dealing with live time series data I recommend you check Timescale out.

If you need assistance, feel free to reach out to one of our R/Shiny experts.