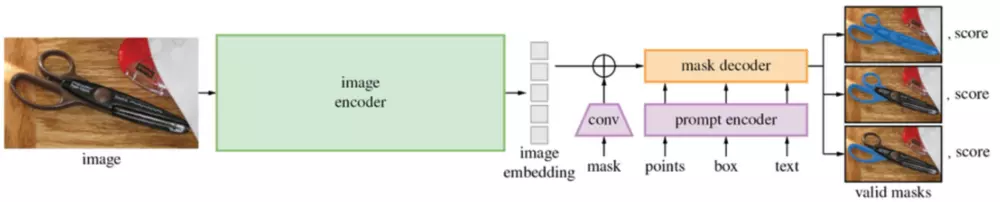

SAM, the Segment Anything Model, was recently released by Meta’s FAIR lab. It is a state-of-the-art image segmentation model that aims to revolutionize the field of computer vision. SAM follows the foundation-model approach that has significantly impacted natural language processing (NLP): it is trained on a promptable segmentation task and adapts to diverse downstream segmentation problems through prompt engineering. In practice, SAM can segment an object from a single click or from interactively selected points that mark regions to include in or exclude from the object. It can also generate masks for all objects in an image automatically. Once the image embedding has been precomputed, SAM returns a segmentation mask for any prompt almost instantly, enabling real-time interaction with the model.
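For readers who want to try the automatic "segment everything" mode described above, here is a minimal sketch using the open-source `segment_anything` Python package. The local checkpoint path, the `vit_h` model variant, and the `min_area` threshold are assumptions for illustration, and `keep_large_masks` is a hypothetical helper rather than part of the library.

```python
def keep_large_masks(records, min_area=500):
    """Drop tiny masks and sort the rest from largest to smallest.

    `records` is a list of dicts with an "area" key, which is the shape
    returned by SamAutomaticMaskGenerator.generate(). `min_area` (pixels)
    is an illustrative threshold, not a recommended value.
    """
    kept = [r for r in records if r["area"] >= min_area]
    return sorted(kept, key=lambda r: r["area"], reverse=True)


def segment_everything(image_rgb, checkpoint="sam_vit_h_4b8939.pth"):
    """Run SAM's automatic mask generator on an HxWx3 uint8 RGB array.

    `checkpoint` is an assumed local path to downloaded SAM weights.
    """
    # Imported lazily so the helper above works without the model installed.
    from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

    sam = sam_model_registry["vit_h"](checkpoint=checkpoint)
    generator = SamAutomaticMaskGenerator(sam)
    # Each record also carries the binary mask under "segmentation".
    return keep_large_masks(generator.generate(image_rgb))
```

Filtering by area is only one possible post-processing step; camera-trap images often produce many small masks for leaves and grass, so some filtering is usually needed before the output is useful.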

Wildlife Data Image Segmentation with SAM

Wildlife research depends heavily on effective image analysis. SAM, with its pioneering “segment-anything” philosophy, transforms this space by handling diverse wildlife datasets with little effort. Camera traps, pivotal in wildlife monitoring, amass vast collections of images. Traditional analysis struggles at such scale, but SAM’s versatile approach identifies and isolates fauna with ease. This not only can accelerate conservation measures but also showcases SAM’s potential in broader applications, from aerial wildlife surveys to granular behavioral studies, reinforcing its impact on contemporary wildlife research.

Original image courtesy of ANPN/Panthera

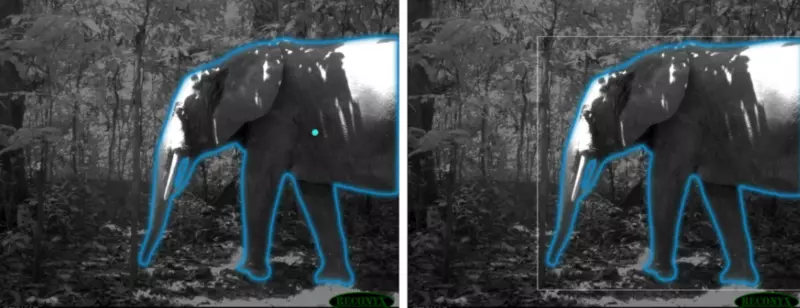

When prompted by clicks, SAM effectively eliminated foreground elements, ensuring the segmented representation was solely focused on the intended subject, be it an animal or any other point of interest. For most samples, it was able to discern between animal subjects and their environment using only a single click, demonstrating an uncanny knack for the given task.
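A single-click prompt like the one described above could be sent to SAM roughly as follows. This is a sketch under assumptions: `predictor` is a `segment_anything.SamPredictor` on which `set_image(...)` has already been called, and `best_mask_index` and `segment_from_click` are hypothetical helper names.

```python
def best_mask_index(scores):
    """Return the index of the highest predicted-quality score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def segment_from_click(predictor, x, y):
    """Segment the object under one foreground click at pixel (x, y).

    `predictor` is assumed to be a SamPredictor with set_image(...)
    already called, so the image embedding is computed once and then
    reused for every click.
    """
    import numpy as np  # imported lazily; only needed when calling the model

    masks, scores, _ = predictor.predict(
        point_coords=np.array([[x, y]]),
        point_labels=np.array([1]),  # 1 = foreground, 0 = background
        multimask_output=True,       # ask SAM for several candidate masks
    )
    # Keep the candidate that SAM itself rates highest.
    return masks[best_mask_index(scores)]
```

Requesting several candidates and keeping the top-scored one is a common choice for ambiguous single clicks, where the click could refer to a whole animal or just one part of it.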

Original image courtesy of ANPN/Panthera

One facet of SAM that stood out was its efficiency in segmenting animals when presented with bounding boxes. This is remarkable because the boxes provided were not tightly fitted for accurate segmentation. Yet SAM handled them deftly, offering clean cut-outs of the animals from their environment.
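To illustrate what prompting with a loosely fitted box looks like in code, here is a small sketch. `loosen_box` is a hypothetical helper that simulates an imprecise box by padding a tight one, and `segment_from_box` assumes a `SamPredictor` that already holds the image; neither is taken from the experiments above.

```python
def loosen_box(box, margin, width, height):
    """Grow an (x0, y0, x1, y1) box by `margin` pixels, clipped to the image."""
    x0, y0, x1, y1 = box
    return (
        max(0, x0 - margin),
        max(0, y0 - margin),
        min(width - 1, x1 + margin),
        min(height - 1, y1 + margin),
    )


def segment_from_box(predictor, box):
    """Segment the main object inside an XYXY pixel box.

    `predictor` is assumed to be a SamPredictor with set_image(...)
    already called.
    """
    import numpy as np  # imported lazily; only needed when calling the model

    masks, _, _ = predictor.predict(
        box=np.array(box),       # SAM expects boxes in XYXY format
        multimask_output=False,  # a box is usually unambiguous: one mask
    )
    return masks[0]
```

For example, `segment_from_box(predictor, loosen_box(tight_box, 20, w, h))` would test how much slack a box can have before the cut-out degrades.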

Original image courtesy of ANPN/Panthera

While both prompting techniques – clicking and bounding box – are distinct in their approach, the resulting segmentations turned out to be of comparable quality. This flexibility in choice of segmentation method without compromising on the outcome quality is a testament to SAM’s robustness.

Original image courtesy of ANPN/Panthera

Original image courtesy of ANPN/Panthera

SAM’s ability to distinguish individual entities within a crowd is laudable. Whether provided with practically crafted bounding boxes or prompted with a mere one or two clicks, the model discerned between multiple entities with finesse. This capability is particularly crucial in wildlife contexts, where discerning individual animals within a group is often required.
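When each animal in a group is segmented with its own prompt, the per-animal masks can be merged into a single label image for counting or review. The sketch below shows one hypothetical way to do that; `to_label_map` and its first-mask-wins rule for overlapping pixels are assumptions for illustration, not part of SAM.

```python
def to_label_map(masks):
    """Merge per-animal binary masks into one label image.

    `masks` is a list of equally sized 2D masks (nested lists or arrays).
    In the result, 0 is background and k marks the k-th animal. Where
    masks overlap, the earlier mask keeps the pixel.
    """
    height, width = len(masks[0]), len(masks[0][0])
    labels = [[0] * width for _ in range(height)]
    for k, mask in enumerate(masks, start=1):
        for r in range(height):
            for c in range(width):
                if mask[r][c] and labels[r][c] == 0:
                    labels[r][c] = k
    return labels
```

The masks themselves would come from one SAM prediction per animal, each driven by that animal's box or clicks.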

Original image courtesy of ANPN/Panthera

Venturing into the realm of segmenting specific body parts of animals was slightly unpredictable. The model’s performance varied across different body parts, and while it wasn’t always clear which parts SAM would excel at, the results were satisfactory for the parts it did manage to segment.

Original image courtesy of ANPN/Panthera

No model is without its challenges, and SAM is no exception. While its accomplishments in the wildlife domain are commendable, there are areas awaiting refinement. A closer examination of some samples reveals minor discrepancies in boundary precision. For instance, subtle details like the right ear or half of a tusk were occasionally overlooked. These nuances, while minor, underscore the potential avenues for further fine-tuning and enhancement.
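Misses like a dropped ear or half a tusk can be quantified if hand-annotated reference masks are available, which is an assumption here and not something the evaluation above relied on. The sketch below computes two standard measures on binary masks: intersection over union (IoU) and recall, the fraction of the reference mask that the prediction covers. The function names are hypothetical.

```python
def mask_iou(pred, truth):
    """Intersection over union of two equally sized binary masks."""
    inter = union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            p, t = bool(p), bool(t)
            inter += p and t
            union += p or t
    # Two empty masks agree perfectly by convention.
    return inter / union if union else 1.0


def mask_recall(pred, truth):
    """Fraction of reference-mask pixels that the prediction covers."""
    covered = total = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if t:
                total += 1
                covered += bool(p)
    return covered / total if total else 1.0
```

A missing body part shows up as reduced recall, even when the rest of the outline is accurate.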

Original image courtesy of ANPN/Panthera

Plankton Data / Microorganism Image Segmentation with SAM

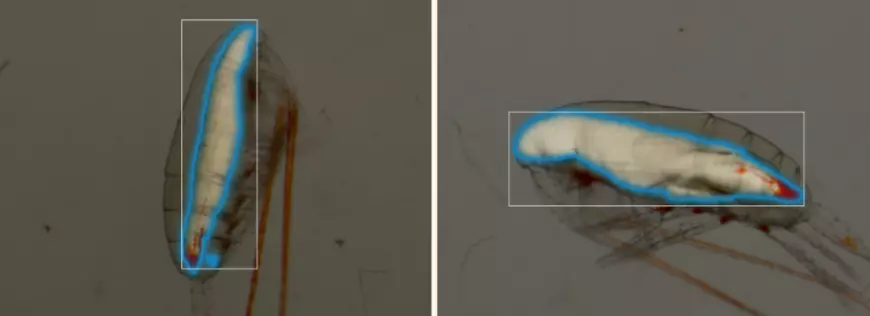

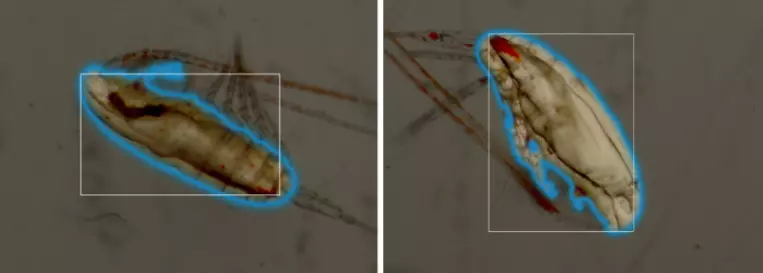

In the following section we’ll analyze how well SAM performs in lipid-sac segmentation tasks on binocular copepod data.

Unlike with wildlife images, segmenting regions in plankton data effectively requires three things: plankton bodies that are vertically or horizontally aligned, samples with clear and discernible boundaries, and carefully crafted bounding boxes.

Image from Machine Learning and Plankton: Copepod Prosome and Lipid Sac Segmentation (Appsilon)

In more realistic cases, SAM completely fails to segment lipid sacs via bounding boxes.

Image from Machine Learning and Plankton: Copepod Prosome and Lipid Sac Segmentation (Appsilon)

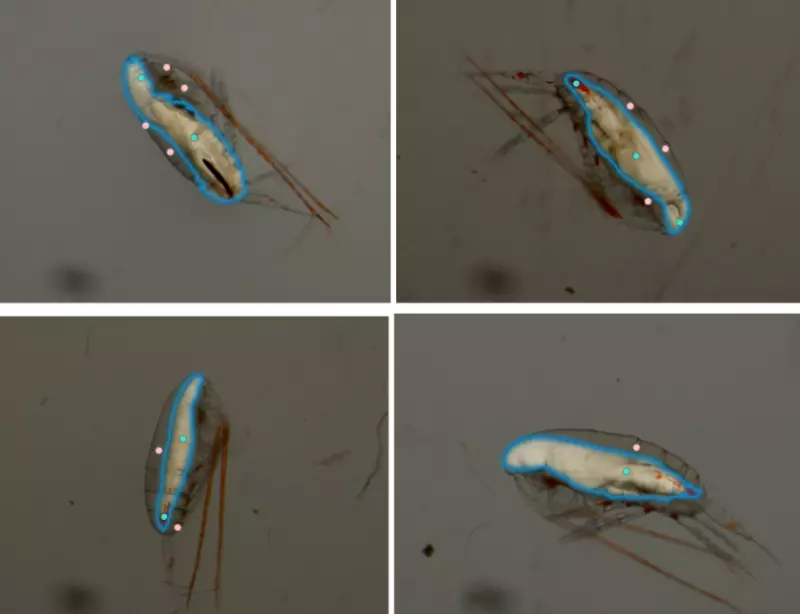

Interactive segmentation, which takes between 2 and 6 clicks, emerges as the preferable method. With this approach, SAM’s segmentation capabilities manifest more consistently, delivering trustworthy results.
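A multi-click interaction of this kind can be sketched with the `segment_anything` Python API by accumulating include and exclude clicks and feeding the previous low-resolution mask back into the next prediction. `ClickSession` is a hypothetical wrapper written for illustration, and it assumes a `SamPredictor` on which `set_image(...)` has already been called.

```python
class ClickSession:
    """Accumulates include/exclude clicks for one object."""

    def __init__(self):
        self.coords = []         # [(x, y), ...] in pixel coordinates
        self.labels = []         # 1 = include, 0 = exclude
        self.prev_logits = None  # low-res mask from the previous round

    def add(self, x, y, include=True):
        """Record one click; include=False marks a region to exclude."""
        self.coords.append((x, y))
        self.labels.append(1 if include else 0)

    def predict(self, predictor):
        """Re-run SAM with all clicks so far and return the current mask."""
        import numpy as np  # imported lazily; only needed with the model

        masks, _, logits = predictor.predict(
            point_coords=np.array(self.coords),
            point_labels=np.array(self.labels),
            mask_input=self.prev_logits,  # None on the first round
            multimask_output=False,
        )
        self.prev_logits = logits  # reused as a hint in the next round
        return masks[0]
```

In use, one would call `add(...)` after each click and `predict(predictor)` to refresh the mask, stopping once the lipid sac is outlined well enough.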

Image from Machine Learning and Plankton: Copepod Prosome and Lipid Sac Segmentation (Appsilon)

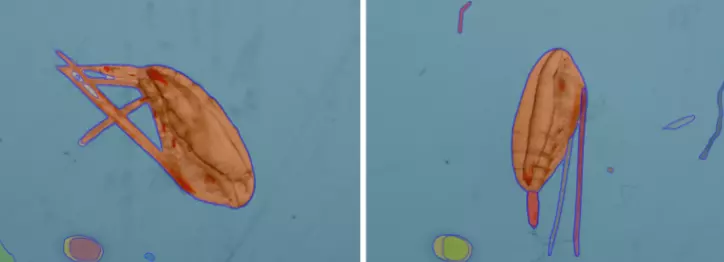

Zooming out to a broader perspective, SAM might be better suited for distinguishing entire microscopic organisms from their surroundings. Separating finer anatomical structures, such as lipid sacs, prosomes, or antennae, poses a more significant challenge, suggesting that SAM’s potential value in such microscopic settings lies in broader organism-level segmentation.

Image from Machine Learning and Plankton: Copepod Prosome and Lipid Sac Segmentation (Appsilon)

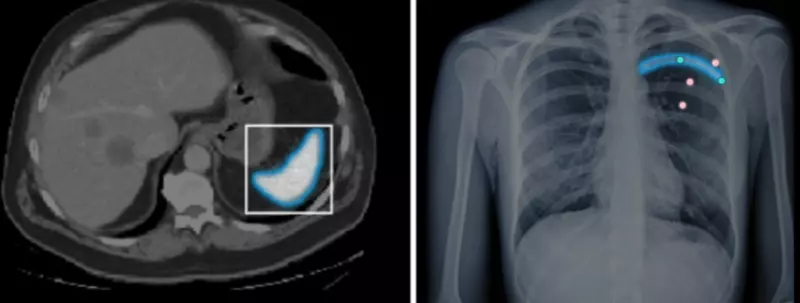

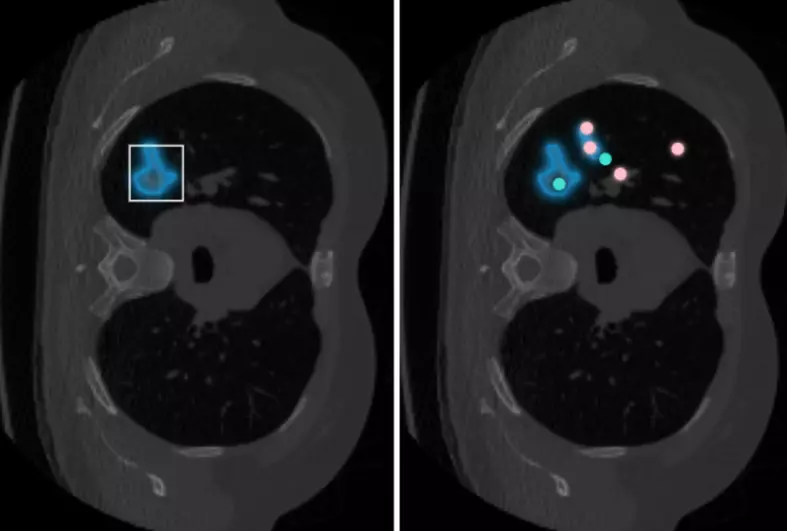

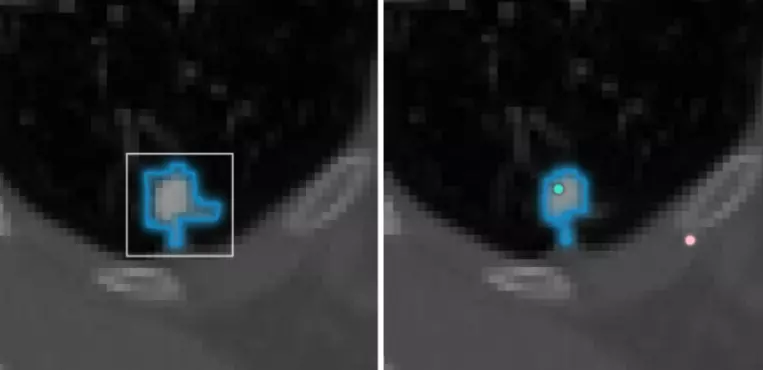

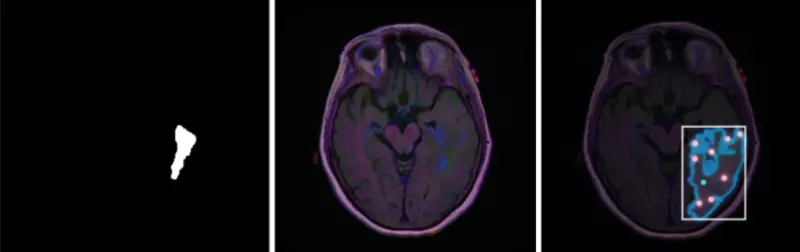

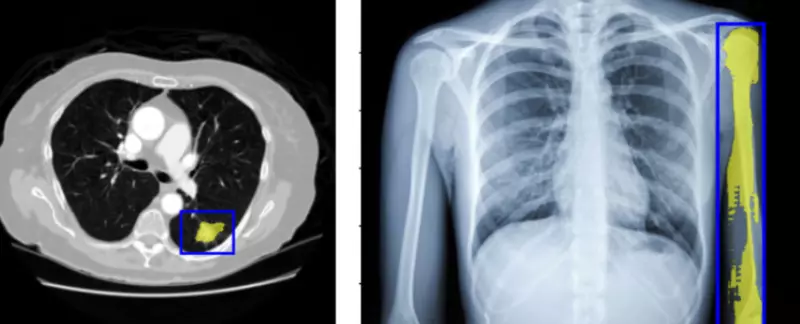

Medical Data Image Segmentation with SAM

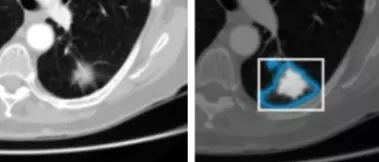

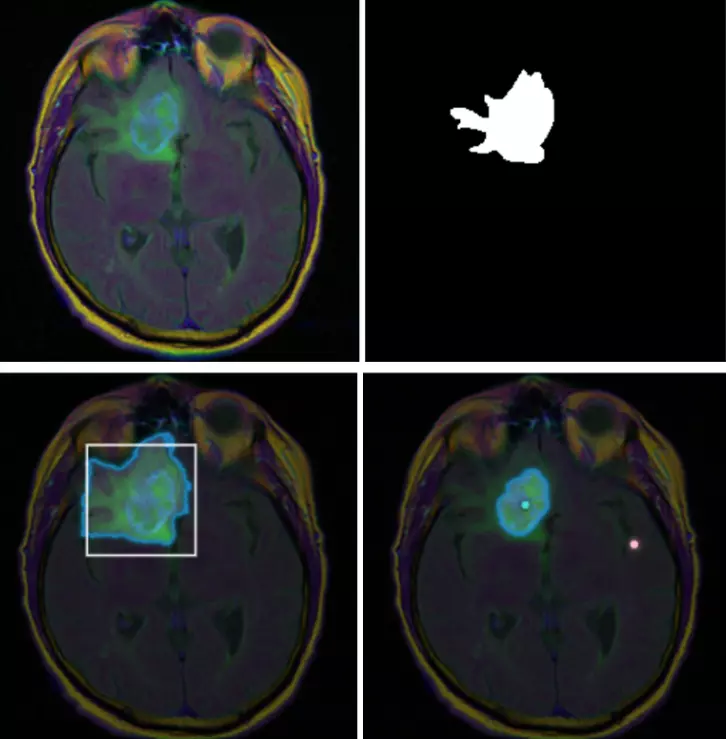

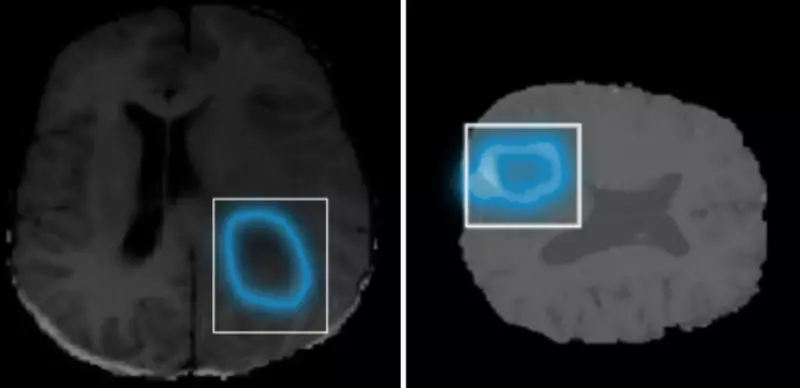

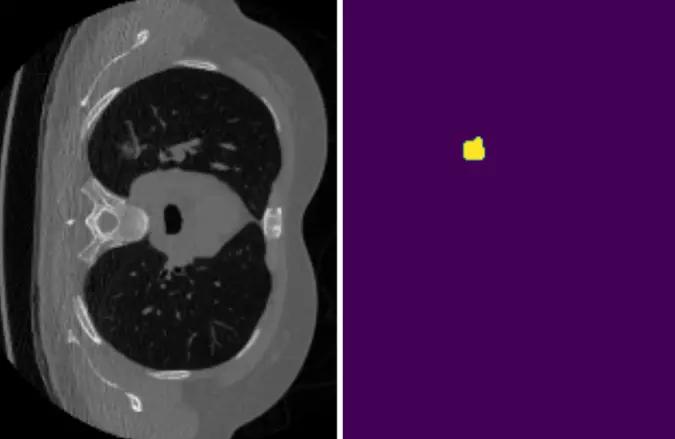

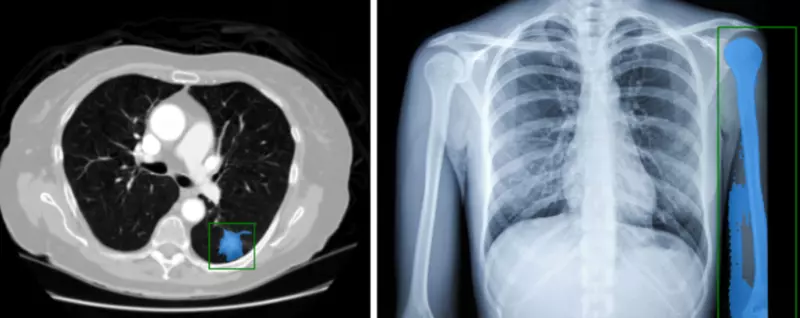

SAM’s performance in medical image segmentation (MIS) is a mixed bag. MIS is challenging due to complex modalities, fine anatomical structures, uncertain and complex object boundaries, varying optical quality of imagery, and a wide range of object scales. Despite these challenges, SAM has shown promising results on some specific objects and modalities. However, it has failed on others, indicating that its zero-shot segmentation capability may not be sufficient for direct application to MIS. SAM perceives objects in medical images better when given manual hints such as points and boxes, so it performs better in prompt mode than in everything mode. As expected, SAM performs much better on easier tasks such as organ segmentation than on more realistic use cases such as tumor segmentation: it performed decently on the former but failed badly on the latter.

In the easier cases, the model was able to segment the organ or bone from realistic bounding boxes or from up to 6 clicks.

SAM’s proficiency wanes when tasked with tumors. While it can discern tumors, it often renders them as indistinct blobs, failing to capture the nuanced boundaries or the extended tendrils typical of malignant growths. This limitation persists even with color-enhanced or ostensibly simpler samples.

On particularly simple or straightforward samples, SAM demonstrated consistent performance. Its segmentation was predictable when dealing with these less complex medical images.

As anticipated, SAM is helpless when faced with intricate or non-standard samples. However, there’s a silver lining: aiding SAM with preprocessed images or additional assistance can sometimes coax out better results, albeit inconsistently.
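One simple form of assistance is to zoom in: crop a window around the region of interest, run SAM on the crop, and map the resulting mask back to the full image. The sketch below shows only the coordinate bookkeeping. It assumes the crop is segmented at its native resolution (no upscaling), and both helper names are hypothetical.

```python
def crop_window(cx, cy, size, width, height):
    """Square window of side `size` centered near (cx, cy).

    The window is shifted as needed to stay inside the image. Returns
    (x0, y0, x1, y1) with x1 and y1 exclusive, so a NumPy image can be
    cropped as image[y0:y1, x0:x1].
    """
    size = min(size, width, height)
    x0 = min(max(0, cx - size // 2), width - size)
    y0 = min(max(0, cy - size // 2), height - size)
    return x0, y0, x0 + size, y0 + size


def paste_mask(crop_mask, window, width, height):
    """Place a mask predicted on the crop back into full-image coordinates."""
    x0, y0, x1, y1 = window
    full = [[0] * width for _ in range(height)]
    for r in range(y1 - y0):
        for c in range(x1 - x0):
            full[y0 + r][x0 + c] = 1 if crop_mask[r][c] else 0
    return full
```

Cropping gives the region of interest more of SAM's fixed input resolution, which may help with small structures, though as noted above the gains are not consistent.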

Difficult segmentation task

SAM result on difficult task

Assisted sample (zoomed in)

Results on assisted sample

For samples where even a non-expert human eye would find it challenging to discern a tumor, SAM unsurprisingly struggled to achieve any level of segmentation whatsoever.

Extensions

We explored MedSAM and SAMhq, two notable offshoots of the original SAM model. MedSAM, crafted with medical imaging in mind, and SAMhq, aiming to perfect segmentation, certainly sparked interest in many corners. While their foundational concepts and demonstrations suggest potential, our hands-on evaluation, primarily using medical data, revealed a different story. MedSAM often required meticulously crafted bounding boxes and generally underperformed SAM in our tests. SAMhq, on the other hand, displayed slight improvements on intricate boundaries but still seemed not quite ready for real-world medical deployments. Perhaps refining these models on specific datasets might sacrifice some broad applicability but could lead to much-needed performance gains.

MedSAM

Tailored for the medical imaging domain, MedSAM is rooted in SAM’s ViT-Base backbone and was developed using a comprehensive dataset of over one million image-mask pairs representing various imaging modalities and cancer types. In our hands-on evaluation, primarily using medical data, it often required meticulously crafted bounding boxes and generally underperformed SAM.

SAM-hq

Created to address SAM’s perceived shortcomings in segmenting intricately structured objects, SAMhq introduces the High-Quality Output Token, aiming to elevate the mask prediction quality. Drawing from a relatively small but fine-grained dataset of 44k masks, SAMhq promises improved segmentation quality. In our own evaluations, SAMhq displayed marginal improvements on intricate boundaries but still seemed not quite ready for real-world medical deployments.

These extensions underscore the intricate balance between specialization, generalization, and the resulting performance. While both MedSAM and SAMhq were built on SAM’s robust foundation, their efficacy in practical scenarios varies. The subtle enhancements seen with SAMhq and the precise demands of MedSAM provide valuable insights into the complexities of fine-tuning foundational models for specific domains or refined outputs. Further fine-tuning on niche datasets might trade some generalizability for a needed improvement in performance.

Discussion of SAM’s Efficacy

Within the realm of medical imaging, SAM demonstrates clear limitations. While capable of handling simpler tasks, it struggles in more complex scenarios, such as distinguishing intricate tumor boundaries. Especially in cases where even human interpretation is challenged, SAM’s segmentation falls short. Its current state suggests that careful discretion is needed when considering its use in medical image segmentation, underscoring the importance of domain-specific tools for such critical applications.

While SAM introduces a transformative approach to instant segmentation with its ability to intuitively “cut out” any subject, MBAZA offers a complementary strength in rapid biodiversity monitoring with AI, even in offline settings. The fusion of SAM’s wide-ranging adaptability with MBAZA’s efficient classification may herald a new era in wildlife data processing.

In the microscopic realm, SAM presents a promising tool for distinguishing microorganisms from their milieu, particularly benefiting environmental and medical studies. While its strength lies in broad segmentation, it falls short in isolating fine anatomical details. This gap is where more sophisticated plankton segmentation solutions, adept at segmenting lipid sacs and prosomes, could complement SAM. By combining SAM’s broad organism-level differentiation with another solution’s detailed structure-level analysis, researchers could achieve a more comprehensive and efficient microscopic image analysis, thus broadening application possibilities in diverse fields.

SAM’s “Segment Anything” philosophy signals a transformative step in image analysis. While its expansive capabilities are pioneering, the real-world implications across domains like wildlife, microscopic studies, and medical imaging present both opportunities and challenges. Taken together, SAM’s versatility, its potential synergies with other technologies, and the areas where it may fall short offer a holistic view of its promise and limitations.

You can check out SAM for yourself using the demo at https://segment-anything.com/demo